vSAN Introduction

What is vSAN?

This is the first post in a series on vSAN, VMware’s software-defined storage offering. vSAN uses the disks within ESXi hosts to create a resilient, scalable shared storage platform for the virtual infrastructure, providing many of the features of SAN/NAS shared storage without the need for additional hardware. There are also operational, performance, and scaling benefits associated with bringing the storage under the hypervisor’s control.

Unlike some other software-defined-storage solutions, vSAN is not a separate appliance but instead is baked into the ESXi hypervisor. In fact there is no separate install; installation (which I’ll cover in a future post) is simply a case of applying a license and configuring the cluster through vCenter.

vSAN dates back to 2013, with a General Availability launch in March 2014 (the first release was version 5.5, which shipped as part of ESXi 5.5 U1), and has evolved to version 6.6, the current release at the time this post was written.

How does it work?

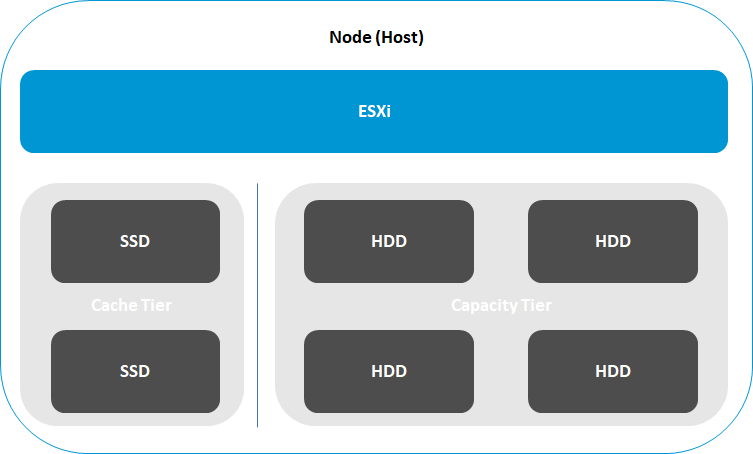

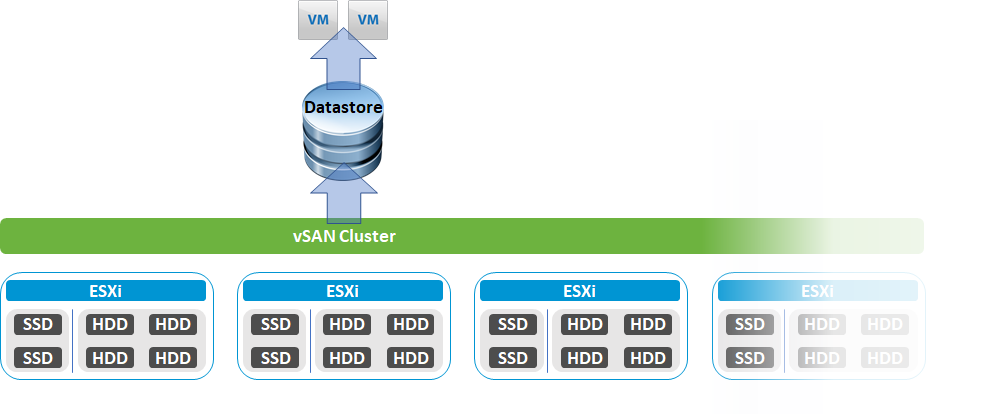

The vSAN infrastructure is built from standard x86 servers, often referred to as nodes, running the ESXi hypervisor. The disks in each host are allocated to one of two tiers of storage: cache or capacity. There are two configuration paths here: vSAN can be deployed using a hybrid model, where the cache tier is flash and the capacity tier uses spinning disks (HDD), or an “All-Flash” model, where flash storage is used for both tiers.

These hosts combine to make a cluster (a vSAN cluster aligns with the clusters in a vSphere Datacentre), and the disks across the member nodes are amalgamated into a single vSphere datastore, which can then be populated with VMs like any other VMware datastore.

Data on this single datastore can be protected across multiple hosts to provide resilience. The most basic example is a RAID-1 equivalent mirror, whereby two copies of each piece of data are stored on separate hosts. This means a host can be taken down for maintenance, or suffer a failure, and the data is still available to vSphere. If the affected VM(s) were running on the host that failed, the regular vSphere HA/FT functionality would ensure the VM was kept running (in the case of FT) or restarted on a new host (in the case of HA). More advanced protection using methods similar to RAID 5 or 6 is also available, and I’m planning a later post to cover this.
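To make the mirroring idea concrete, here is a minimal Python sketch. It is a toy model only: the host names, the round-robin placement, and the helper functions `place_mirrored` and `survives` are my own assumptions for illustration and do not reflect how vSAN actually places data. It simply shows that with two copies on separate hosts, losing any one host leaves every object available, while losing two hosts at once may not.

```python
from itertools import combinations


def place_mirrored(objects, hosts, copies=2):
    """Assign each object to `copies` distinct hosts (simple round-robin)."""
    if copies > len(hosts):
        raise ValueError("need at least as many hosts as copies")
    placement = {}
    for i, obj in enumerate(objects):
        placement[obj] = {hosts[(i + k) % len(hosts)] for k in range(copies)}
    return placement


def survives(placement, failed_hosts):
    """True if every object keeps at least one copy on a surviving host."""
    failed = set(failed_hosts)
    return all(replicas - failed for replicas in placement.values())


# Hypothetical four-node cluster and three objects to protect.
hosts = ["esx01", "esx02", "esx03", "esx04"]
placement = place_mirrored(["vm-a", "vm-b", "vm-c"], hosts)

# Check every possible single-host failure, then every two-host failure.
single_ok = all(survives(placement, [h]) for h in hosts)
double_ok = all(survives(placement, pair) for pair in combinations(hosts, 2))

print("survives any single host failure:", single_ok)
print("survives any double host failure:", double_ok)
```

The trade-off is capacity: keeping two full copies means each piece of data consumes twice its size on the datastore.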

A cluster can be extended by scaling out: adding new nodes to the cluster increases capacity, resilience, and flexibility. The hyper-converged nature of vSAN means that these building blocks expand compute power in line with storage capacity. This model has particular advantages in solutions such as VDI, where a virtual infrastructure can be expanded in a predictable fashion based on the number of users/desktops being provisioned.
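As a rough illustration of that predictability, the sketch below estimates a node count from a desktop count. The figures are made up: `desktops_per_node` is a hypothetical number you would have to derive from your own sizing exercise, and the function `nodes_for_desktops` is not part of any VMware tool. The three-node floor reflects the vSAN minimum covered in the next section, and the optional spare node is an assumption to leave headroom for maintenance.

```python
import math


def nodes_for_desktops(desktops, desktops_per_node, min_nodes=3, spare_nodes=1):
    """Estimate node count: enough nodes for the load, plus spare headroom."""
    if desktops_per_node <= 0:
        raise ValueError("desktops_per_node must be positive")
    needed = math.ceil(desktops / desktops_per_node)
    return max(needed + spare_nodes, min_nodes)


# Hypothetical sizing: 100 desktops per node.
for desktops in (100, 500, 1200):
    print(desktops, "desktops ->", nodes_for_desktops(desktops, 100), "nodes")
```

Because each node brings both compute and storage, growing the user base becomes a matter of adding identical building blocks rather than sizing the two separately.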

What do I need?

As a minimum you need three ESXi hosts for a vSAN cluster, and four nodes are recommended for a production setup to allow for continued resilience during scheduled maintenance. 2-node ROBO and even 1-node lab clusters are technically possible, but those specific use cases are outside the scope of this introduction.

Software-wise, you’ll need vCenter, vSphere licenses for those ESXi hosts, and vSAN licenses as well. vSAN is licensed per-socket in the same way vSphere is, so whilst it is an additional cost, it’s a consistent model that scales with the size of the environment. I’ll go into more detail on this and the various editions available in another post.

One of the most common points of confusion I’ve seen amongst prospective users (primarily those looking to deploy to a small environment or homelab) is the need for compatible hardware. Although vSAN does simplify storage operations, it requires a solid, reliable, certified hardware base to build on. Repurposing existing hardware, or slapping some SSDs on an old controller, is unlikely to end well without plenty of research and a certain level of expertise. If anyone reading this is planning on building something up in a homelab to see what vSAN looks like, I’d thoroughly recommend looking at the VMware Hands-On-Labs (in particular HOL-1808-01-HCI - vSAN v6.6 - Getting Started), which allow you to do this for free and without worrying about providing the hardware.

One additional recommendation is that all hosts in the cluster have the same specification, as this helps ensure consistent performance. It also avoids availability issues which may occur if a node with a larger capacity than the others fails or requires maintenance. Having nodes of the same size helps ensure that there is sufficient capacity left to tolerate this failure, and reduces complexity.
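The capacity argument can be shown with some simple arithmetic. The Python sketch below is a deliberately simplified assumption-laden check: the function `can_tolerate_host_loss`, the host names, and the capacity figures are all hypothetical, and it ignores everything except raw capacity. It compares two clusters with the same total capacity, one uniform and one with a single oversized node.

```python
def can_tolerate_host_loss(host_capacity_tb, used_tb):
    """True if the used data still fits after the largest host is lost."""
    remaining = sum(host_capacity_tb.values()) - max(host_capacity_tb.values())
    return used_tb <= remaining


# Two hypothetical clusters, both with 40 TB of raw capacity in total.
uniform = {"esx01": 10, "esx02": 10, "esx03": 10, "esx04": 10}
uneven = {"esx01": 25, "esx02": 5, "esx03": 5, "esx04": 5}

used_tb = 20  # capacity consumed on the datastore, protection copies included

print("uniform cluster OK:", can_tolerate_host_loss(uniform, used_tb))
print("uneven cluster OK:", can_tolerate_host_loss(uneven, used_tb))
```

With identical nodes, losing any one host leaves 30 TB, which is plenty for the 20 TB in use. In the uneven cluster, losing the large node leaves only 15 TB, so there is nowhere to rebuild the data it held.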

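Finally, you will need 10GbE network connectivity between the hosts for the vSAN traffic. This is a hard requirement for all-flash configurations; hybrid configurations are supported on 1GbE, but 10GbE is still recommended.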

Summary

TL;DR

vSAN is a software-defined storage platform from VMware which is integrated into vSphere. It uses disks from multiple ESXi hosts to deliver a resilient datastore. To get started you will ideally need to invest in four or more hosts, connected by a 10G network and each equipped with vSphere and vSAN licensing and two tiers of disk: cache and capacity. The easiest way of ensuring these hosts have tried and tested hardware is to purchase “vSAN ReadyNodes” from your server supplier.

Future posts in this series will cover vSAN licensing, installation, and aspects of operation.

Further Reading: https://www.vmware.com/products/vsan.html